Five-Year Evaluation of the Management Accountability Framework

Archived information

Archived information is provided for reference, research or recordkeeping purposes. It is not subject to the Government of Canada Web Standards and has not been altered or updated since it was archived. Please contact us to request a format other than those available.

3 MAF Assessment

Based on a series of 23 questions identified by a group of deputy heads, we developed four key strategic questions to support our review of MAF as a management performance assessment tool, of its associated methodology and administrative practices, and of its benefits relative to its costs. The four strategic questions are as follows:

- Is MAF meeting its objectives?

- Are MAF assessments robust?

- Is MAF cost effective?

- Is MAF governance effective?

A brief overview of the MAF process is outlined below. In the sections that follow, each of the four questions is addressed individually, with a conclusion for each strategic question.

3.1 MAF Model, Process and Cycle

The MAF is structured around 10 broad areas of management, or elements: governance and strategic direction; values and ethics; people; policy and programs; citizen-focused service; risk management; stewardship; accountability; results and performance; and learning, innovation and change management. Figure 2 below displays the 10 MAF elements.

Figure 2 - The 10 MAF elements

Organizations are assessed in 21 Areas of Management (AoM), each of which has lines of evidence with associated rating criteria and definitions that support an overall rating for the AoM. The four-point assessment scale rates each AoM as strong, acceptable, opportunity for improvement or attention required. The MAF Directorate, within TBS, has recently developed a logic model for MAF that sets out its intended outcomes; the logic model is presented in Annex B to this report. It is important to note that MAF is not the sole determinant of a well-managed public service. Beyond its broad oversight role as the Management Board and Budget office, TBS oversees Expenditure Management System (EMS) renewal (under which TBS advises Cabinet and the Department of Finance on potential reallocations based on strategic reviews, to ensure that spending is aligned with government priorities) and the Management Resources and Results Structure Policy (which provides a detailed, whole-of-government understanding of the ongoing program spending base). The alignment of MAF with government priorities is presented in Annex C.

The MAF assessment process is performed annually by TBS based on evidence submitted by departments and agencies in support of the quantitative and qualitative indicators defined within the framework. Assessments are completed by TBS representatives and include a quality assurance process to ensure that results are robust, defensible, complete and accurate.

For each assessment round, all 21 AoMs are assessed for every department and agency, with the following exceptions:

- Small agencies (defined as organizations with 50 – 500 employees and an annual budget of less than $300 million) are subject to a full MAF assessment only once every three years, on a rotating cycle. In Round VI, eight small agencies were assessed.

- Micro agencies (defined as organizations with fewer than 50 employees and an annual budget of less than $10 million) complete a questionnaire, accompanied by supporting documents as applicable, which informs an interview with senior TBS representatives. In Round VI, three micro agencies were assessed.

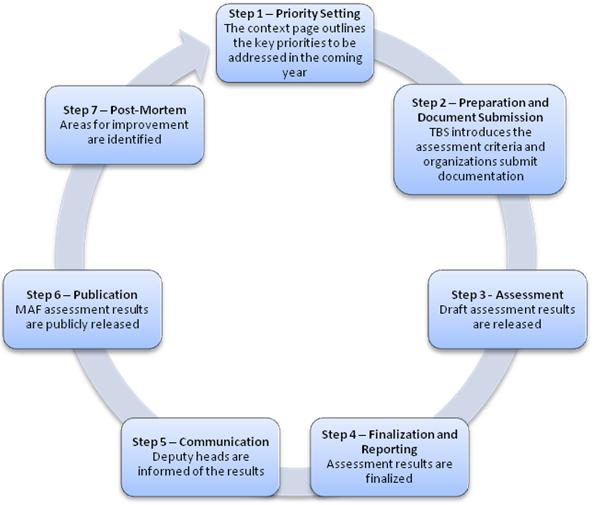

The main steps to the MAF assessment process are detailed below:

Step 1 – Priority Setting: The MAF assessment process begins with the previous round’s context page, provided by TBS to the deputy head, which outlines the key priorities to be addressed in the coming year. During the year, departments and agencies develop action plans and take steps to address the identified deficiencies and improve managerial performance within the organization.

Step 2 – Preparation and Document Submission: In advance of the assessment round, TBS conducts awareness and training sessions to introduce changes to the assessment criteria. Once the assessment round begins, departments and agencies submit documentation, based on the documented assessment and rating criteria developed for each AoM and line of evidence, so that they can be assessed against these measures.

Step 3 – Assessment: The process to arrive at a preliminary assessment includes detailed analysis, reviews and approvals within the policy and program sectors of TBS. This includes vertical reviews (a review of all AoM results for an individual department or agency) and horizontal reviews (a comparison of AoM results across all departments and agencies assessed), which are performed by senior TBS officials. Once these are completed, the draft results are presented at a TBS Strategy Forum session, at which the Associate Secretary, the Program Sector Assistant Secretaries and the AoM Leads review and discuss the draft results on a department-by-department basis. Based on the outcome of the Strategy Forum, the draft assessment results are released to the individual departments and agencies for feedback.

The MAF Portal

The MAF Portal is the tool used to submit evidence against the lines of evidence and to ensure timely and effective communication between TBS and departmental MAF representatives. The Portal was piloted at the beginning of MAF Round V to improve communications between TBS and departments and agencies. Since then, it has become an essential tool for both TBS and MAF respondents in executing the MAF process. The Portal facilitates the departmental submission of documents to TBS as evidence to support MAF assessments and ratings, and it archives all documents sent electronically by departments and agencies.

TBS uses a web-based application called the MAF Assessment System, which is linked to the MAF Portal. This application has been developed and enhanced over the past three MAF assessment rounds and is used to process and archive TBS assessment activities.

Step 4 – Finalization and Reporting: Based on feedback from the departments and agencies, the assessment results are finalized. In addition, a context page and a streamlined report are drafted to support the assessment results and identify management priorities for the upcoming year. A second TBS Strategy Forum session is conducted to provide guidance on any context pages, streamlined reports or assessment ratings and to finalize the materials and obtain consensus on the final departmental assessments.

Step 5 – Communication: The final reports are approved by the Secretary of the Treasury Board, and the Secretary sends a letter to each deputy head informing them of the results and identifying priorities to address in the upcoming year. To support the development of action plans, bilateral debriefings are scheduled between the deputy head and the Secretary for selected departments, with the Program Assistant Secretaries debriefing the remaining departments. This is complemented by the Committee of Senior Officials (COSO) process, whereby the Clerk (or Deputy Clerk) of the Privy Council provides direct feedback to each deputy head regarding his/her performance, including the MAF results for his/her department. Beyond the deputy head community, departmental results are posted in the MAF Portal to support broader communication with all departmental officials involved in the MAF assessment process.

Step 6 – Publication: To ensure transparency to the public, the MAF assessment results are publicly released on Treasury Board’s website.

Step 7 – Post-Mortem: Once the round concludes, the MAF Directorate launches a post-mortem with Treasury Board Portfolio (TBP) staff, management area leads, and MAF coordinators in various departments and agencies. The purpose of the post-mortem is to assess the process and methodology and to identify improvements to be integrated into the subsequent round. Recommendations are made in consultation with TBP staff and the MAF Directorate and may include improvements to the process and/or timelines, adjustments to management areas, the introduction of new models or methodology, or additional training. TBP’s Management Policy and Oversight Committee and the Secretary of the Treasury Board are responsible for approving any recommendations before they are implemented in the next round.

Figure 3 summarizes the key milestones within the MAF assessment process.

The MAF Directorate has taken a leading role in supporting MAF’s evolution and addressing stakeholders’ concerns. During Round VI, the MAF Directorate facilitated a Leading Management Practices conference for departments and agencies. This forum provided TBS guidance on specific AoMs where horizontal results across departments and agencies were weaker, and showcased departments and agencies of various sizes that had successfully implemented best practices within individual AoMs. AoM leads have also taken the initiative to facilitate dialogue and efforts to address horizontal issues identified through MAF by leveraging existing functional communities, such as the Information Technology, Internal Audit and Financial Management communities.

Other initiatives undertaken by the MAF Directorate include the development of MAF methodology and guidance documents, the management and improvement of the MAF Portal, the development of the MAF logic model and the framework for a risk-based assessment approach.

3.2 Is MAF Meeting Its Objectives?

Because the MAF logic model presented in Annex B was developed only recently, we were unable to base our evaluation approach on it. In evaluating MAF, we found that it has evolved from an initial “conversation” between deputy heads and TBS into a much more defined, rigorous and documented assessment. Through our interactions with TBS, we confirmed that this evolution was a deliberate response to increasing expectations for a clear demonstration of accountability within the public sector.¹² We therefore determined that, to be most useful, the evaluation should focus on the current objectives for MAF, as outlined in section 1.1 of this report, rather than on its initial goals.

It is our view that MAF is meeting its stated objectives. MAF provides both an enterprise-wide view of management practices to departments and a view of government-wide trends and management issues to TBS. In addition, we think the 10 key elements of the MAF provide an effective tool to define “management” and establish expectations for good management within a department or agency; this view is generally shared by key stakeholders and is supported by our review of other models.

In our interviews and consultations, we consistently heard and observed that MAF and the associated assessment process have led to an increased focus on management practices and have become a catalyst for best practices. As shown in the MAF logic model in Annex B to this report, an ultimate outcome of MAF is “continuous improvement in the quality of public management in the Federal public service.” With this goal in mind, there are opportunities for MAF to evolve further to ensure its ongoing sustainability and usefulness and the achievement of the expected long-term outcomes.

MAF has resulted in a number of unintended impacts, both benefits and consequences. The unintended benefits have included:

- Sharing of best practices and encouraging communities of interest;

- International recognition of Canada’s leading edge management practices;

- Increasing transparency of government; and

- The use of MAF results by deputy heads who are new to a department or agency to get an initial ‘lay of the land’.

Conversely, the unintended consequences have been:

- Increased reporting burden for departments and agencies and the creation of ‘MAF response’ units;

- Ineffective resource allocation in an effort to improve the MAF scores; and

- Potential for rewarding adherence to process rather than outcomes.

1. By formalizing expectations of management, MAF has led to an increased focus on management practices, i.e., management matters. MAF is becoming a catalyst for integrating best practices into departments and agencies.

Several of the deputy heads interviewed stated that they use the AoMs to set managerial priorities and expectations with the department’s senior management team. For example, MAF has influenced performance agreements with senior management teams, whereby deputy heads include MAF results as a measure of individual performance. This has included the creation of tools such as a MAF Memorandum of Understanding (MOU) or other performance agreements between the Deputy Minister and an Assistant Deputy Minister.

TBS has been able to leverage MAF results to clarify management expectations for deputy heads, give departments and agencies incentives to focus on management, and identify enterprise-wide trends. For example, based on the results of individual organization assessments, TBS has been able to provide direction to departments and agencies for short-term priority setting. We understand that TBS has used MAF results to inform oversight activities and support intelligent risk taking. By using MAF results to target oversight to the highest-risk areas, TBS can recommend and support greater autonomy for departments to innovate and take risks. Examples where MAF results currently inform oversight activities include delegations of authority, budget implementation decisions, Strategic Reviews and Treasury Board submissions.

There are opportunities to use the MAF assessment process to showcase, recognize and further encourage innovation across the federal government. In 2009, a report on the Operational Efficiency Programme in the UK recommended that “the Cabinet Office should embed departmental capability and track record in fostering innovation and collaboration in Capability Reviews.”¹³

MAF has facilitated the identification of government-wide issues and the development of associated action plans. As owners of the individual AoMs, AoM leads within TBS are responsible for addressing the systemic issues identified through the MAF results. Enterprise trends and systemic issues identified through the AoM assessments are discussed at the Public Service Management Advisory Committee (PSMAC). PSMAC (formerly the Treasury Board Portfolio Advisory Committee (TBPAC)) serves as the focal point for integrating and ensuring coherent, comprehensive and consistent implementation of the Treasury Board’s integrated, whole-of-government management agenda. TBS also provides a précis to deputy heads identifying horizontal management issues. By facilitating the sharing of best practices across departments and agencies and through existing communities of interest, TBS is supporting efforts to prioritize and address these issues.

2. There continues to be a place for ongoing dialogue between TBS and deputy heads during the MAF process.

“The next generation of MAF: it would [be] interesting to sit down with TBS, where they would identify the things they are going to be looking at. Having that kind of dialogue ahead of time and setting the standard of performance for the year.” (Source: deputy head interview)

As noted above, MAF has evolved from an initial “conversation” between deputy heads and TBS to a much more defined, rigorous and documented assessment. This has allowed TBS to leverage MAF to support its oversight and compliance monitoring role relative to its policy framework.

We think that MAF has matured to the point that more emphasis could be placed on conversation and engagement between TBS senior officials and deputy heads. We heard consistent concerns, across a majority of our deputy head interviews, that the “process” has overtaken the “conversation”. This results, at least in part, from the broad scope and frequency of the MAF assessments, which limit the time and ability of TBS senior managers to engage in meaningful, two-way conversations with all departments. As a result, both larger departments and smaller agencies have come to perceive TBS as an assessor rather than an enabler. To fully achieve the objectives set out for MAF and communicate expectations of managerial performance, there is an opportunity to engage senior departmental representatives more formally.

For the UK Capability Reviews, engagement at the senior level is seen as a critical part of the review. As outlined in Take-off or Tail-off? An Evaluation of the Capability Reviews Programme, the consultations and interactions between the permanent secretary and the departmental boards are honest, intense, emotional and hard-hitting, and they are necessary to drive real change within the department.

Many deputy heads indicated that they would welcome priority-setting and performance review discussions with senior executives within TBS (the Secretary or Assistant Secretaries). All 19 deputy heads who raised the issue of priority-setting discussions with senior TBS officials said they would welcome such discussions. Regardless of whether their organization’s performance was stronger or weaker, deputy heads believed strongly that these discussions are important for departments to address their organization’s current circumstances, communicate their priorities for the year and agree on performance priorities and expectations.

The UK’s existing strategy for communicating results externally, which includes press releases of results, has been much more public and visible than that of MAF. While this approach has been designed to maximize the impact of the results, we do not think that MAF would benefit from adopting it.
